Part 1

My first installation was created to help people physically express and differentiate their emotions through facial recognition. The installation gave people feedback depending on which of three emotions they expressed: anger, sadness or happiness. Interactors could switch between facial expressions depending on how they felt, and they could see on the computer screen how their facial expression changed with the emotion they were expressing. This was beneficial because they could relate their feelings to a visual stimulus of the emotion they were expressing, as well as to the coordinated light motif that accompanied it. This installation served as a constructive introduction to these three emotions.

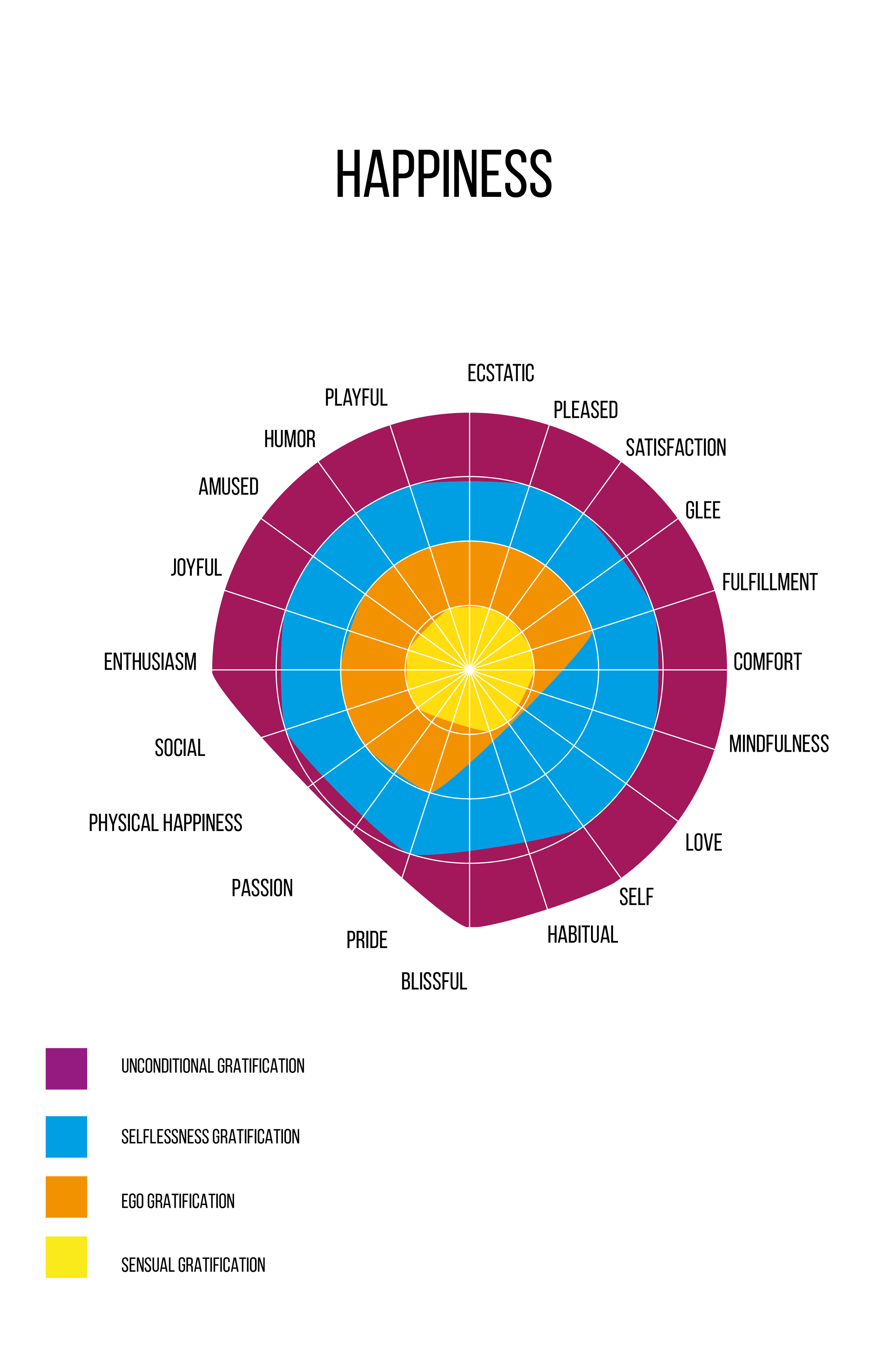

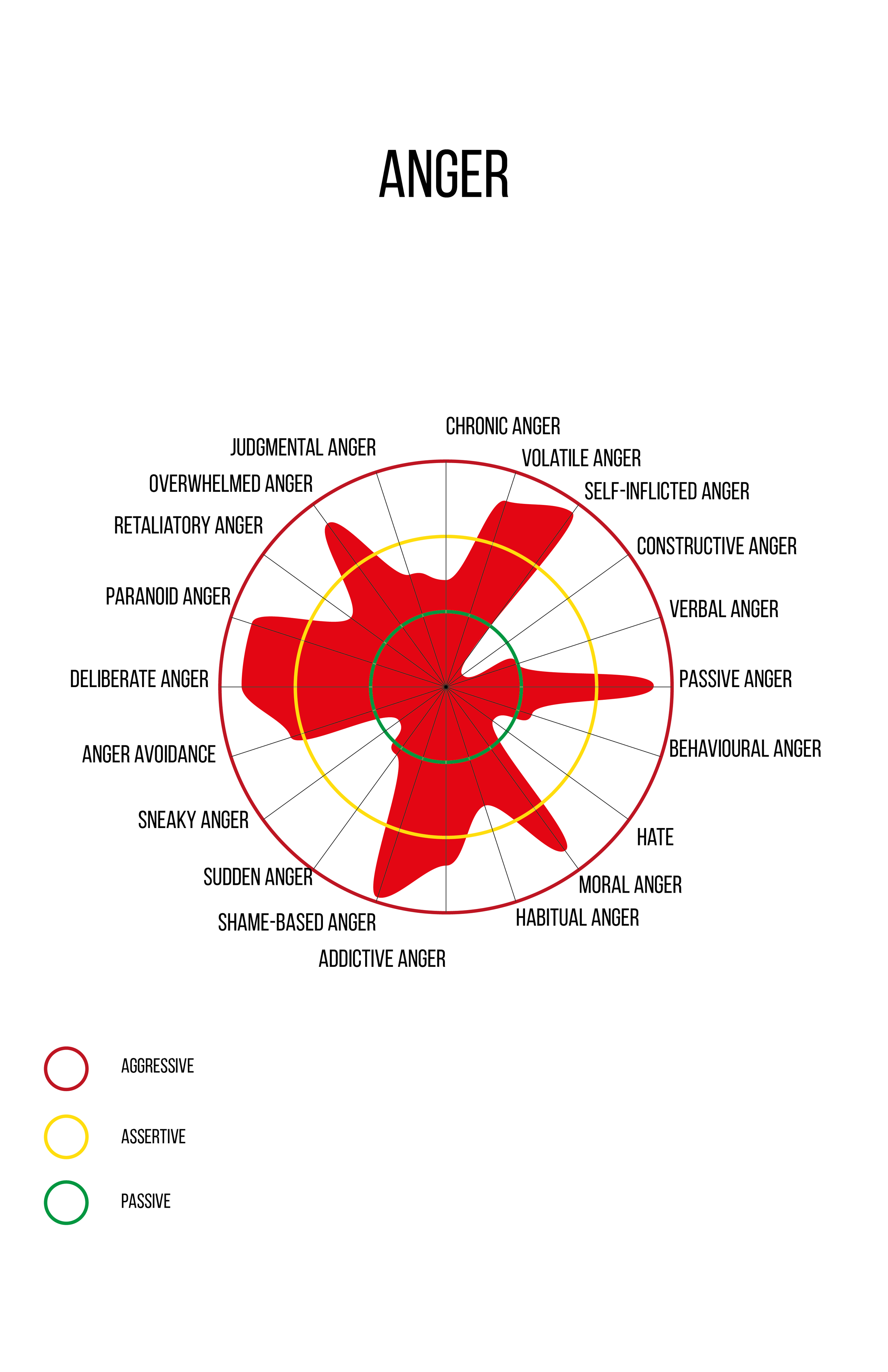

“Emotions,” wrote Aristotle (384–322 BCE), “are all those feelings that so change men as to affect their judgements, and that are also attended by pain or pleasure” (Solomon, 2015). According to Solomon (2015) in his paper, Emotion Psychology, emotions are a heterogeneous category that encompasses a wide variety of important psychological phenomena. Some emotions are very specific because they concern a particular person, object or situation. Others, such as distress, joy or depression, can be very general. To clarify, in my installations I focus on only three broad emotions: happiness, anger and sadness. Emotions can be very complex because they come in many variations, so I chose these three emotions to keep my interactors from being confused by the complexity of what they may be feeling.

My research made clear that my installation focuses on physical expressions of emotion. This category is important to understand with respect to my installation because researchers have found that not all emotions are reflected in our facial expressions. As a result, people in my installation may only be able to express some forms of happiness, anger or sadness. According to many theorists, basic emotions such as fear, anger, joy, surprise and sadness each consist of an “affect program,” which is a “complex set of facial expressions, vocalizations, and autonomic and skeletal responses” (Solomon, 2015). This suggests that using facial recognition in my installation can help interactors understand what they are feeling, because they will be “seeing” their own facial expression in the act of expressing one of these emotions.

What I found interesting in Solomon’s paper is that, within the behavioural category of emotion, gestures, postures and mannerisms, whether conscious or unconscious, can be either spontaneous or deliberate. Deliberate behaviour can be thought of as merely an “expression” because of the conscious activity it involves. This matters for my installation: since interactors are aware that they are expressing an emotion, they are only manifesting an expression of what they are really feeling. However, I would argue that once interactors present an expression to the screen, they may begin to genuinely feel that emotion and, for example, start to cry, an unconscious reaction they did not expect from interacting with the installation.